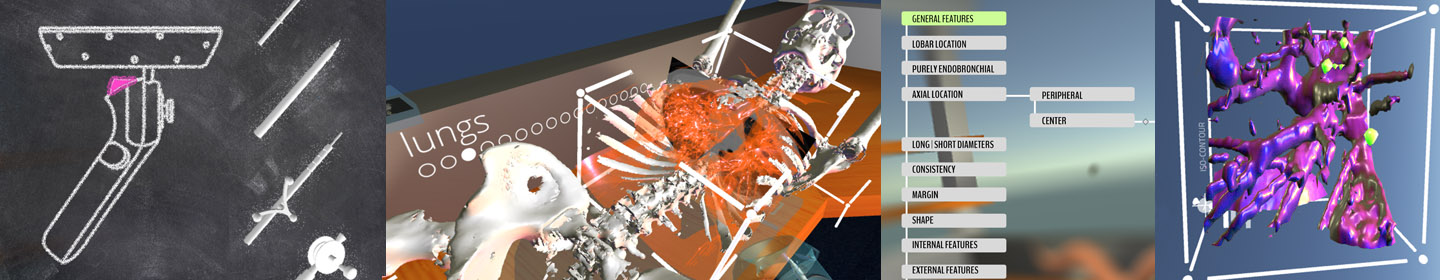

OVS+TUMOR

OVRAS | Open Visualization Space + TUMOR ANNOTATION

| santiago LOMBEYDA | ashish MAHABAL | daniel CRICHTON | heather KINCAID | george DJORGOVSKI | christos PATRIOTIS | sudhir SRIVASTAVA |
|---|---|---|---|---|---|---|
| Caltech/ArtCenter | Caltech | JPL | JPL | Caltech | NCI | NCI |

Our main goal in creating OVS has been to build a viable workspace in virtual reality: one that lets scientists exploit the benefits of immersion while maintaining a strong sense of presence and interacting seamlessly with 3D data representations.

We have accomplished this by:

OVS+Tumor used our innovative OVS space, in partnership with JPL and the National Cancer Institute (NCI), to aid in marking candidate tumors in actual 3D CT scans so that they can serve as a training data set for the larger effort of creating a fast and reliable classification tool for masses found through radiology.

We have found that pinpointing these candidate tumors in OVS reduces the level of expertise currently needed to interpret individual 2D scans. This allows a wider set of personnel to collaborate in building a viable training data set, to which we can then apply machine learning techniques to improve our ability to classify, recognize, and ultimately treat tumors.

_ meshplease_ library

ashish MAHABAL

daniel CRICHTON

heather KINCAID

george DJORGOVSKI

christos PATRIOTIS

sudhir SRIVASTAVA

_ jim BARRY

_ thomson lab | CALTECH

_ with support from NSF

_ with support from CZI

_ with support from CALTECH STUDENT AFFAIRS

_ with donations from HTC

_ with donations from MICROSOFT

_ with donations from NVIDIA

_ with donations from LOGITECH

PROJECT BORN from CALTECH's OVRAS LAB

_ utilizing STEAMVR

_ utilizing ZEN FULCRUM BROWSER