FEATURING OVS+TUMOR from SIGGRAPH'2019



The original goal of our Open Virtual Reality for Art and Science (OVRAS) at Caltech (formerly Open Virtual Reality Art Spaces) was to explore novel immersive interaction paradigms and develop tools for artistic creation and modeling using new consumer hardware just before it becomes ubiquitous. This includes, but is not restricted to, adaptations of classic drawing and painting styles. To enable such exploration, we established a VR-capable working space, accessible to undergraduate and graduate students with needs to:

As a result, our original plan included follow-up work tackling the archival and web-based distribution of digital 3D artwork. However, our experience in creating interaction tools in virtual reality, together with the emerging interest of scientists around us in VR as a platform for data exploration, has led us to naturally extend our work to work spaces that enable science exploration (and now formal and informal scientific academic instruction). These are seamless spaces that uphold a strong sense of presence and give careful consideration to ergonomics, enabling prolonged sessions of actual scientific work that are embellished, facilitated, and made more effective by being intertwined with VR exploration and analysis.

studiotool 2014-2016

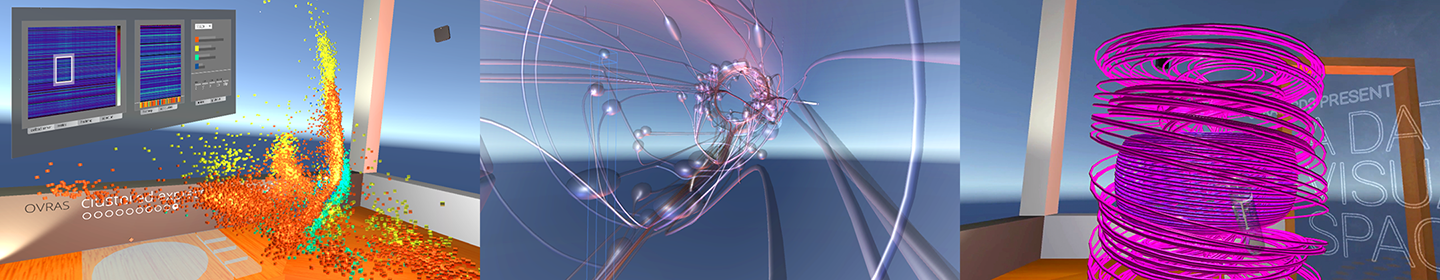

OVRAS's studiotool is a Unity3D application that uses a development version of the Oculus Rift and a Leap Motion to create a virtual environment where a user can 'paint' in 3D space. Using a simple wand (stylus) with just a pair of buttons, a user can directly interact with the space around them. The emphasis is on creating a stable and safe space, conducive to long sessions of art generation.


OVS: Open Visualization Space 2017-present

Caltech's Open Visualization Spaces (OVS) is a simple prototype virtual reality environment, built so that researchers around Caltech can 'drop' their data into the system and quickly and seamlessly interact with and explore their models. It currently supports obj, fbx, csv (connected lines), and csv (3D scatter plots), as well as data exported from Google's Tilt Brush. Furthermore, the space the user is immersed in matches the actual physical space, giving the strong sense of presence and stability needed for long stretches of productive exploration, analysis, and work.

ERT: an Enhanced Reality for Teaching 2018-2019

in collaboration with Caltech's Center for Teaching, Learning, & Outreach

ERT, the Enhanced Reality Teaching space, is a software prototype of a teaching+lab virtual reality environment. We used user experience and interaction design methodologies to design a space that enables university-level science teaching and virtual laboratories. Students have access to a rich learning and social experience, and are empowered with tools for streamlined interaction with the instructor, with other students, and ultimately with 3D scientific models and simulations.

aRvo: Augmented Reality Visualization for Oceanography 2018

in collaboration with ArtCenter and the National Academies Keck Futures Initiative

The goal of the Augmented Reality Visualization for Oceanography (aRvo) endeavor is to explore and create an interface that enables scientists to plan the deployment of oceanographic scientific instruments (particularly self-driving ocean gliders), and that facilitates real-time inspection and post-experiment exploratory analysis. All of this takes place within an interactive 3D projection of the ocean floor, layered with instrument data, on top of physical surfaces in a researcher's natural space.

participants

research collaborators

data research collaborators

student collaborators

ACKNOWLEDGEMENTS

equipment partially funded by the MOORE-HUFSTEDLER FUND; with hardware donations from HTC|VIVE, MICROSOFT, LOGITECH, and NVIDIA